Information Technology Services (ITS)

Use cases for a generative AI-enabled future

Published on: February 16, 2024

At the ITS all-staff meeting on Jan. 30, attendees were asked to generate use cases for an AI-enabled future. In addition to naming a hypothetical generative AI tool and describing its function, they were asked to identify opportunities and risks. After lively discussion at almost 30 table groups, here are a few examples of the many creative outputs from this activity:

- Know-it-all: Smart solution search: Search function that uses AI to produce documents, locate resources, find solutions, etc. based on all tools that a given team uses.

- TLDR: Identifies an issue and generates a concise summary of its findings, populated with the relevant information.

- Class Act: Classifies emergency situations and helps with incident triage and pre-emergency response.

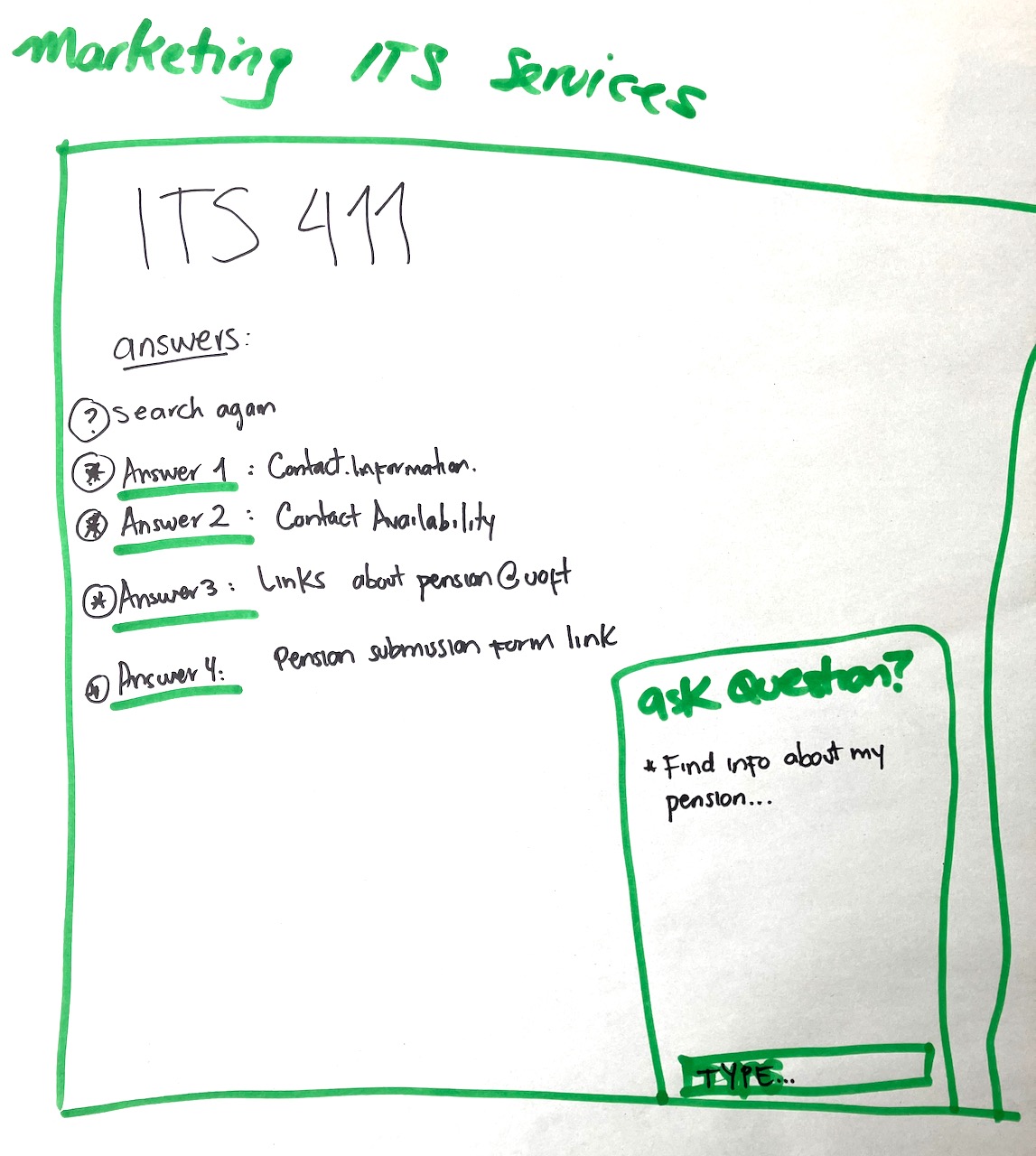

- Smart Coder: Smart assistant that does analysis of the code and provides recommendations to improve code using natural language.

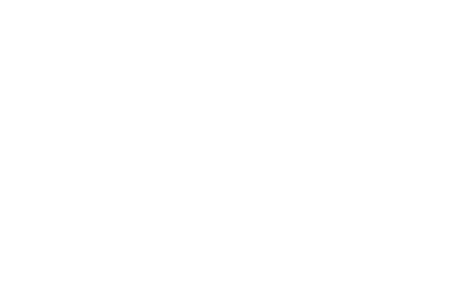

- Ask IT at U of T: Will automate current University Service Catalogue, helping to identify the service and help needed to complete the service.

- UTORbus: Translates proposed project into a professional business plan, scope of work and estimated timeline based on training data from past projects.

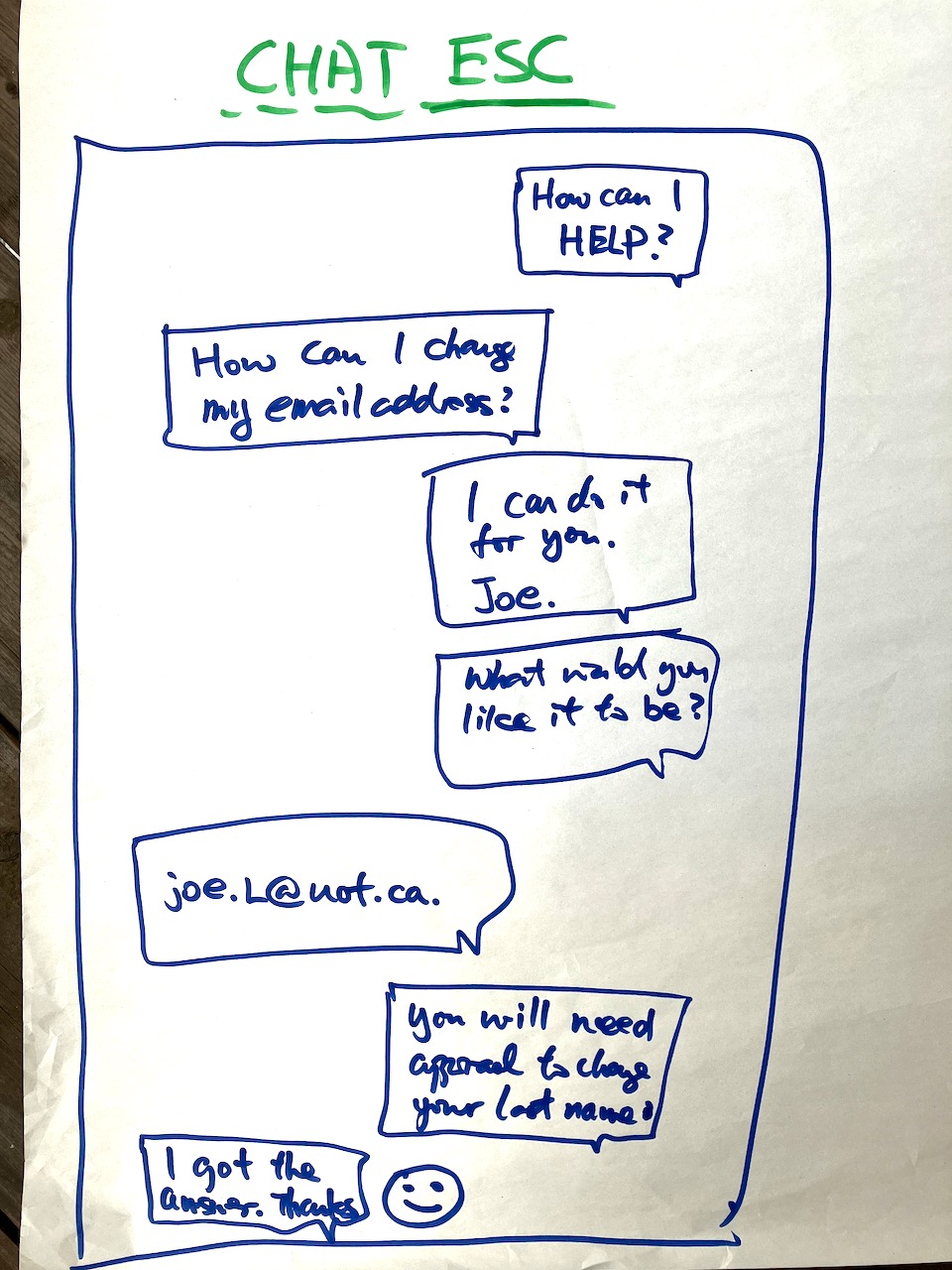

Here are some interface mock-up drawings the teams provided for their tools:

Based on the input from the ITS group activity, here are five examples of opportunities* for the use of generative AI in a university IT context:

- Save time: Streamline services to reduce response times, expedite basic support and allow staff to focus on resolving complex issues.

- Empower users: Enable users to independently troubleshoot and solve their own problems.

- Increase efficiency: Automate tasks, provide instant responses and streamline workflows.

- Optimize planning: Provide accurate estimates, enhance planning processes and mitigate integration risks.

- Enhance content generation: Summarize meetings, remove irrelevant information and generate new ideas, improving overall productivity and effectiveness.

Based on the input from the ITS group activity, below are five examples of risks* associated with the use of generative AI in a university IT context:

- Dependence on provided information: AI’s effectiveness is limited by the quality and accuracy of the information it receives, leading to potential errors or biases for which the University may be liable.

- Bias and incorrect classifications: AI systems may exhibit biases or inaccuracies, resulting in incorrect classifications or prioritizations.

- Privacy concerns: Use of AI may expose or misuse data, leading to privacy breaches.

- Over-reliance and skill loss: Over-reliance on AI may diminish critical thinking skills and human oversight, leading to reduced adaptability and quality assurance.

- Integration and compatibility issues: Integrating AI systems with existing infrastructure may pose challenges, leading to inefficiencies or security vulnerabilities.

*Examples of opportunities and risks were generated through ChatGPT analysis of group activity outputs.